Like many programmers whose degrees have nothing to do with computer programming, I have been teaching myself to code since 2019 in the hope of succeeding in the field. As a self-taught developer, I am practical and goal-oriented about what I learn. This is why I particularly like web scraping: not only does it have a wide variety of use cases, such as product monitoring, social media monitoring, and content aggregation, but it is also easy to pick up.

The essential idea of web scraping is to extract snippets of information from websites and export them into an easily readable format. If you’re a data-driven person, you will find great value in web scraping. Luckily, there are free web scraping tools that capture web data automatically without coding.

Web scraping is a website extraction technique that pulls vital information. Software programs that scrape the web usually simulate human exploration of the web by either implementing low-level Hypertext Transfer Protocol (HTTP) or embedding a full-fledged web browser, such as Internet Explorer, Google Chrome, or Mozilla Firefox.

The web is more complex than we might imagine. That means we need to put in time and effort to maintain the scraping work, not to mention scraping at scale from multiple websites. On the flip side, scraping tools save us from writing code and from endless maintenance.

Web scraping is the process of gathering information from the Internet. Even copy-pasting the lyrics of your favorite song is a form of web scraping! However, the words “web scraping” usually refer to a process that involves automation. Some websites don’t like it when automatic scrapers gather their data, while others don’t mind.

To give you an idea of the pros and cons of scraping with Python versus a web scraping tool, I will walk you through the entire workflow in Python and then compare it with the same process in a web scraping tool.

Without further ado, let’s get started:

Web scraping with Python

Project:

- website: Yelp.com

- Scraping content: business title, ratings, review counts, phone number, price range, address, neighborhood

You will find the full code here: https://github.com/whateversky/yelp

Prerequisite:

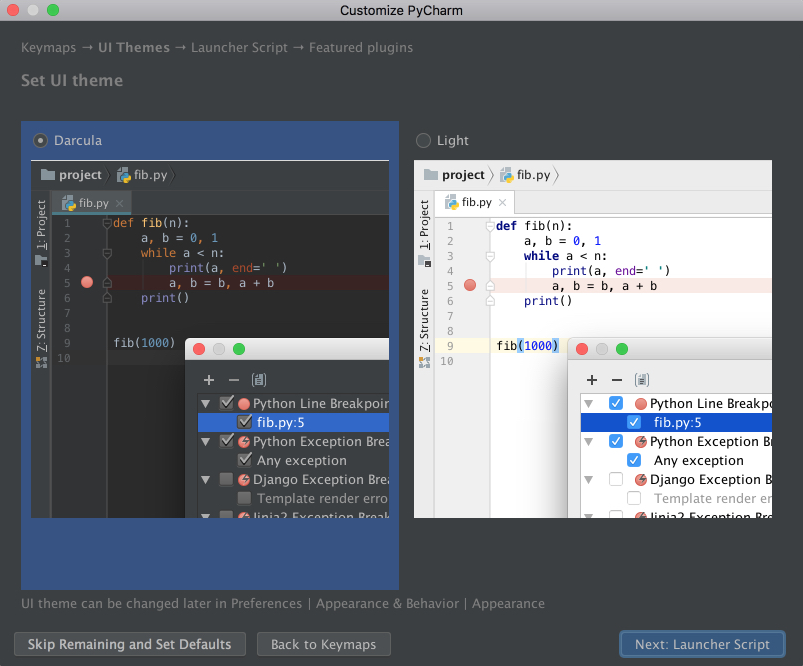

- Pycharm— for fast-checking and fixing the coding errors

- Bejson — cleaning the JSON structure format

The general scraping process will look like this:

- First, we create a spider that defines how we crawl Yelp and extract data from it. In other words, we send GET requests and then set rules for the scraper to crawl the website.

- Then, we parse the web page content and return a dictionary with the extracted data. In other words, the spider must return either an Item object or a Request object.

- Finally, we export the extracted data returned by the spider.
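The export step can be as small as writing the spider’s dictionaries out with Python’s standard library. Below is a minimal sketch, assuming the spider produces one flat dictionary per business; the function names and field names are illustrative and are not taken from the repository.

```python
import csv
import io
import json

# Illustrative column order; match it to the keys your parser produces.
FIELDNAMES = ["name", "rating", "review_count", "phone",
              "price_range", "address", "neighborhoods"]


def records_to_csv(records, fieldnames=FIELDNAMES):
    """Serialize a list of dicts to CSV text; missing keys become empty cells."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def save_csv(records, path, fieldnames=FIELDNAMES):
    """Write the records to a CSV file on disk."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        f.write(records_to_csv(records, fieldnames))


def save_json(records, path):
    """Write the records to a pretty-printed JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)
```

`extrasaction="ignore"` lets the parser add extra keys without breaking the export, which is handy while the set of scraped attributes is still changing.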

Here I focus only on the spider and the parser. However, we certainly need to understand the page structure before extracting data, and while coding you will find yourself constantly inspecting the web page to locate the divs and classes. To inspect the website, open your favorite browser, right-click the page, choose “Inspect”, and select the “XHR” filter in the Network tab. There you will find the corresponding listing information, including store names, phone numbers, locations, and ratings. Expanding “PaginationInfo” shows that there are 30 listings on each page and 6,932 listings in total, so by the end of this tutorial we should be able to get that many results. Now let’s head to the fun part:
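The two numbers from “PaginationInfo” already tell us how many requests the crawl needs. Here is a small sketch of that arithmetic; the JSON below is a hypothetical stand-in whose key names only mimic the XHR response, so check the real payload in DevTools before relying on them.

```python
import json
import math

# Hypothetical payload: the key names imitate the "PaginationInfo" object,
# but the real response may name them differently.
SAMPLE_XHR = '{"paginationInfo": {"resultsPerPage": 30, "totalResults": 6932}}'


def page_offsets(total_results, results_per_page):
    """Return the start offsets needed to walk every results page."""
    return list(range(0, total_results, results_per_page))


info = json.loads(SAMPLE_XHR)["paginationInfo"]
offsets = page_offsets(info["totalResults"], info["resultsPerPage"])

# ceil(6932 / 30) = 232 pages; the last page starts at offset 6930.
assert len(offsets) == math.ceil(info["totalResults"] / info["resultsPerPage"])
print(len(offsets), offsets[0], offsets[-1])  # 232 0 6930
```

So a complete crawl of this search takes 232 listing requests, with the last page holding only the final two listings.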

Spider:

First, open PyCharm and set up a new project. Then create a Python file and name it “yelp_spider.py”.

Getting Page:

We create a get_page method. It takes a start_number argument, the pagination offset of a listing page, and returns that page’s JSON. Note that I also add a user-agent string so the requests look like they come from a regular browser, which helps get past basic scraper detection. We can simply copy and paste the Request Headers from the browser. It is not strictly necessary, but you will find it useful most of the time if you scrape a website repeatedly.

I use the .format() method to insert the offset into the URL, so every endpoint follows the same pattern; in this case, the pattern covers all the listing pages from the search results for “bars in New York City”.

```python
def get_page(self, start_number):
    # Insert the pagination offset into the listing endpoint
    url = "https://www.yelp.com/search/snippet?find_desc=bars&find_loc=New%20York%2C%20NY%2C%20United%20States&start={}&parent_request_id=dfcaae5fb7b44685&request_origin=user".format(start_number)
```
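The method above only builds the URL. Below is a fuller, standalone sketch of what get_page might do, assuming the endpoint still answers with JSON. It uses the standard library’s urllib rather than whatever HTTP client the repository uses, treats the User-Agent value as a placeholder, and drops the session-specific parent_request_id parameter on the assumption that it is optional.

```python
import json
import urllib.request
from urllib.parse import quote, urlencode

SEARCH_ENDPOINT = "https://www.yelp.com/search/snippet"

# Placeholder browser-like header; copy the real values from the Request
# Headers in your browser's Network tab.
HEADERS = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"}


def build_listing_url(start_number, desc="bars",
                      location="New York, NY, United States"):
    """Build the listing endpoint for one pagination offset."""
    params = {
        "find_desc": desc,
        "find_loc": location,
        "start": start_number,
        "request_origin": "user",
    }
    # quote_via=quote encodes spaces as %20, matching the URL in the post
    return SEARCH_ENDPOINT + "?" + urlencode(params, quote_via=quote)


def get_page(start_number, timeout=10):
    """Fetch one listing page and decode its JSON body (makes a network call)."""
    request = urllib.request.Request(build_listing_url(start_number), headers=HEADERS)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Letting urlencode do the escaping means the search term and location can be changed without hand-encoding commas and spaces; get_page(30), for example, would request listings 31 to 60.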

Getting Detail:

Now that we have harvested the URLs of the listing pages, we can tell the scraper to visit each detail page using the get_detail method.

A detail page URL consists of the domain name and a path that identifies the business.

Since we have already gathered those paths from the listing pages, we can simply append each one to https://www.yelp.com/. This way we get a list of detail page URLs.

```python
def get_detail(self, url_suffix):
    # Append the business path to the Yelp domain
    url = "https://www.yelp.com/" + url_suffix
```
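Plain string concatenation produces a double slash if a path already begins with “/”. A small alternative sketch uses urljoin from the standard library to build the whole list of detail URLs; the path format “/biz/...” in the comment is an assumption about what the listing JSON returns.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.yelp.com/"


def detail_urls(url_suffixes, base=BASE_URL):
    """Turn business paths (assumed to look like "/biz/<slug>") into absolute URLs.

    urljoin resolves the path against the domain, so "biz/x" and "/biz/x"
    both end up as "https://www.yelp.com/biz/x", and an absolute URL
    passes through unchanged.
    """
    return [urljoin(base, suffix) for suffix in url_suffixes]
```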

Next, we still need to add a header to make the scraper look more human, much like knocking before entering a room.

Then I use a FOR loop combined with IF statements to locate the tags we want to get, in this case the tags that contain the business name, rating, review count, phone number, and so on.
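The FOR-plus-IF idea can be sketched with the standard library’s html.parser: for every start tag, loop over the wanted fields and check whether the tag carries that field’s marker. The tag names and class values in TARGETS are hypothetical placeholders; Yelp’s real markup differs and changes over time, so read the actual classes from DevTools.

```python
from html.parser import HTMLParser

# Hypothetical markers: (tag, attribute, value) for each field.
TARGETS = {
    "name": ("h1", "class", "biz-title"),
    "rating": ("span", "class", "biz-rating"),
    "phone": ("p", "class", "biz-phone"),
}


class DetailParser(HTMLParser):
    """Collect the text inside every tag that matches one of the targets."""

    def __init__(self, targets):
        super().__init__()
        self.targets = targets
        self.current = None  # field whose tag we are currently inside
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        # FOR each wanted field, IF this tag carries its marker, start capturing
        for field, (want_tag, attr, value) in self.targets.items():
            if tag == want_tag and value in (attr_map.get(attr) or "").split():
                self.current = field
                self.fields.setdefault(field, "")

    def handle_endtag(self, tag):
        # Stop capturing when the matching tag closes (nested tags are kept)
        if self.current is not None and tag == self.targets[self.current][0]:
            self.current = None

    def handle_data(self, data):
        if self.current is not None:
            self.fields[self.current] += data


def parse_detail(html, targets=TARGETS):
    """Return {field: text} for every target found in the HTML string."""
    parser = DetailParser(targets)
    parser.feed(html)
    parser.close()
    return parser.fields
```

This sketch assumes a target tag does not contain another tag of the same name; a real page with deeper nesting is usually easier to handle with a full selector library.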

Unlike listing pages, which return JSON, detail pages normally respond in HTML. Therefore, while parsing, I strip away the punctuation and extra spaces to make the values clean and neat.
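The post does not say exactly which characters are removed, so here is a minimal cleaning sketch under one assumption: collapse all runs of whitespace and trim separator punctuation only from the ends of a value. Parentheses and periods are deliberately kept so values like “(212) ...” or “Main St.” survive.

```python
import re

# Separators trimmed from the ends of a value (an assumed set).
EDGE_PUNCTUATION = ",;:|"


def clean_text(raw, edge_chars=EDGE_PUNCTUATION):
    """Collapse runs of whitespace and trim stray separators from both ends."""
    text = re.sub(r"\s+", " ", raw)
    return text.strip().strip(edge_chars).strip()
```

Trimming only the edges is a conservative choice: stripping every punctuation mark would also mangle phone numbers, prices such as “$$”, and addresses.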

Parsing:

As we visit those pages one by one, we can instruct our spider to obtain the detailed information by parsing the page.

First, create a second file called “yelp_parse.py” in the same folder, and start by importing and running YelpSpider.

Here I add a pagination loop, since the listings are split across multiple pages of 30. The “start_number” is an offset value that starts at “0” and increases by 30 each time we finish crawling the current page. The logic looks like this:

- Get the first 30 listings

- Paginate

- Get listings 31-60

- Paginate

- Get listings 61-90, and so on
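A minimal sketch of that loop, assuming fetch_page is any callable (for example, a wrapper around get_page) that takes an offset and returns that page’s listings as a list. The stopping rules, an empty page or an optional max_results cap such as the 6,932 total, are my assumptions rather than necessarily what the repository does.

```python
PAGE_SIZE = 30  # listings per page, as shown in PaginationInfo


def crawl_all(fetch_page, page_size=PAGE_SIZE, max_results=None):
    """Yield every listing by advancing the start offset one page at a time."""
    start_number = 0
    while max_results is None or start_number < max_results:
        listings = fetch_page(start_number)
        if not listings:
            break  # an empty page means we have run past the last result
        yield from listings
        start_number += page_size
```

Because it is a generator, the caller can process or export listings as they arrive instead of holding all pages in memory.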

Last but not least, I create a dictionary that maps each key to its data attribute, including business name, rating, phone, price range, address, neighborhoods, and so forth.
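One way to build that dictionary is to fix the set of keys up front and normalize a few types along the way. This is a sketch only: the key names are illustrative, and the raw input formats (a rating like “4.5”, a review count like “1,234 reviews”) are assumptions about what the scraped text looks like.

```python
import re

# Keys for the output dictionary; the names are illustrative.
FIELDS = ("name", "rating", "review_count", "phone",
          "price_range", "address", "neighborhoods")


def to_record(raw):
    """Map scraped values onto a fixed set of keys and normalize a few types."""
    record = {field: raw.get(field) for field in FIELDS}  # missing -> None
    if record["rating"] is not None:
        record["rating"] = float(record["rating"])  # e.g. "4.5" -> 4.5
    if record["review_count"] is not None:
        digits = re.sub(r"\D", "", str(record["review_count"]))
        record["review_count"] = int(digits) if digits else None  # "1,234 reviews" -> 1234
    return record
```

Using the same keys for every business keeps the exported rows aligned even when a detail page is missing, say, a price range.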

Scraping with a web scraping tool:

With Python, we interact directly with the web server, portals, and source code. This method can be more efficient, but it involves programming. And because websites change so often, we need to keep editing the scraper to adapt to those changes. The same goes for Selenium and Puppeteer: they are close relatives of this approach, but they come with their own limitations for large-scale extraction compared to plain Python scraping.

On the other hand, web scraping tools are more beginner-friendly. Let’s take Octoparse as an example:

Octoparse’s latest version, OP 8.1, applies the Train Algorithm, which detects data attributes when the web page loads. If you have ever used the iPhone’s face unlock, which applies artificial intelligence, “detection” is not a strange term to you. Likewise, Octoparse automatically breaks down the web page and recognizes various data attributes, for instance business names, contact information, reviews, locations, and ratings.

Take Yelp as an example. Once the web page loads, Octoparse parses the web elements and reads the data attributes automatically. When detection is done, we can see all the data Octoparse captured for us in the preview section, nice and neat! You will also notice that a workflow has been created automatically. The workflow is like a scraping roadmap, and the scraper follows it to capture the data.

We built the same thing in the Python section, but it was not visualized with clear steps and graphs as in Octoparse. Programming is more logical and abstract, which makes it hard to conceptualize without a firm grounding in the field.

But that’s not all: we also want to get information from the detail pages. That’s easy peasy. Just follow the guide in the tips panel and choose “Collect web data on the page that follows”.

Then choose title_url, which takes us to the detail page.

Once we confirm the command, a new step is added to the workflow automatically. The browser then displays a detail page, and we can click any data attribute on it. For example, when we click the business title “ARDYN”, the tips panel responds with a set of actions to choose from. Simply click the “Extract the text of the selected element” command, and it will take care of the rest and add the action to the workflow. Repeat the same step to get “ratings”, “review counts”, “phone number”, “price range”, and “address”.

Once everything is set up, just confirm and run the scraper.

Final thoughts: scraping using Python vs. web scraping tools

Both approaches can get you similar results, but they differ in performance. With Python, there is certainly a lot of groundwork to lay before implementation, whereas scraping tools are a lot friendlier on many levels.

If you are new to the world of programming and want to explore the power of web scraping, needless to say, a web scraping tool is a great starting point. Once you set foot in the door of coding, there are wider choices and combinations that I believe will spark new ideas and make things easier.


Hi guys,

Today I am sharing my experience and the code of a simple web crawler that uses Scrapy to scrape the web domains of

based on the PyCharm IDE, storing the data in MongoDB and finally deploying it to Heroku Scheduler.

(1) Set up your PyCharm IDE environment

Refer to this.

Here’s my run/debug configuration. The project name is “caissSpider”.

(2) The spider

There are lots of tutorials on Scrapy; one project that is useful for beginners is this one.

Here’s my simple project’s structure.

Let’s study them briefly, one by one.

2.1 items.py

As you can see, this Item class is like a collection in a database: it defines the fields of the data scraped from the web. Here, all the fields simply hold strings.

2.2 settings.py

2.2.1. This file defines the spider’s settings. Note that you had better put

in order to avoid Scrapy’s “urlopen error timed out” errors.

2.2.2. Note that for MONGODB_SERVER you have to set up the database username and password for your MongoDB; otherwise, Scrapy will show a “user is empty” error.

2.2.3. Refer to this answer to set the MongoDB username and password:

“This answer is for Mongo 3.2.1 Reference

Terminal 1:

Terminal 2:

if you want to add without roles (optional):

to check if authenticated or not:

it should give you:

2.2.4. For my spider, I store the data in the “news” collection of the “test” database.
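Because the MongoDB user now has a password, the connection string must carry escaped credentials. Below is a settings.py sketch under stated assumptions: MONGODB_SERVER, “test”, and “news” come from this post, while the other setting names, the user name, the password, and authSource=admin (assuming the user was created in the admin database) are hypothetical placeholders.

```python
from urllib.parse import quote_plus

# settings.py sketch: only MONGODB_SERVER, "test" and "news" come from the
# post; every other name and value here is a placeholder.
MONGODB_SERVER = "localhost"
MONGODB_PORT = 27017
MONGODB_USERNAME = "spider_user"    # hypothetical user
MONGODB_PASSWORD = "change:me@now"  # hypothetical password
MONGODB_DB = "test"
MONGODB_COLLECTION = "news"


def mongodb_uri(server, port, username, password, auth_source="admin"):
    """Build a MongoDB connection string with the credentials URL-escaped.

    Characters such as "@" or ":" in a password must be percent-encoded,
    otherwise the URI cannot be parsed.
    """
    return "mongodb://{}:{}@{}:{}/?authSource={}".format(
        quote_plus(username), quote_plus(password), server, port, auth_source)


MONGODB_URI = mongodb_uri(MONGODB_SERVER, MONGODB_PORT,
                          MONGODB_USERNAME, MONGODB_PASSWORD)
```

A pipeline could then pass MONGODB_URI to pymongo.MongoClient. On Heroku, the password is better read from an environment variable than committed to the repository.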

2.3 pipeline.py

The pipeline.py file filters out sensitive words, cleans up the current collection before storing data, and inserts the newly scraped data into the collection.
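Here is a sketch of a pipeline with those three jobs, written against Scrapy’s documented pipeline hooks (from_crawler, open_spider, process_item, close_spider) and PyMongo’s MongoClient, delete_many, and insert_one. It is not the author’s pipeline.py: the word list, the masking approach, the class name, and the MONGODB_URI setting name are all assumptions.

```python
import re

# Hypothetical word list: the post does not say which words are filtered.
SENSITIVE_WORDS = ["badword", "secret"]


def mask_sensitive(text, words=SENSITIVE_WORDS, mask="***"):
    """Replace whole-word, case-insensitive matches of `words` with `mask`."""
    if not words:
        return text
    pattern = r"\b(?:" + "|".join(re.escape(w) for w in words) + r")\b"
    return re.sub(pattern, mask, text, flags=re.IGNORECASE)


class MongoNewsPipeline:
    """Scrapy-style item pipeline: empty the collection, mask words, insert items."""

    def __init__(self, uri, db_name, collection_name):
        self.uri = uri
        self.db_name = db_name
        self.collection_name = collection_name
        self.client = None
        self.collection = None

    @classmethod
    def from_crawler(cls, crawler):
        settings = crawler.settings
        return cls(settings.get("MONGODB_URI"),  # hypothetical setting name
                   settings.get("MONGODB_DB", "test"),
                   settings.get("MONGODB_COLLECTION", "news"))

    def open_spider(self, spider):
        import pymongo  # imported lazily so mask_sensitive works without pymongo
        self.client = pymongo.MongoClient(self.uri)
        self.collection = self.client[self.db_name][self.collection_name]
        self.collection.delete_many({})  # clean up the previous run's documents

    def close_spider(self, spider):
        if self.client is not None:
            self.client.close()

    def process_item(self, item, spider):
        doc = {key: mask_sensitive(value) if isinstance(value, str) else value
               for key, value in dict(item).items()}
        # insert a copy so the ObjectId added by insert_one stays out of the item
        self.collection.insert_one(dict(doc))
        return doc
```

Scrapy only runs a pipeline that is listed in ITEM_PIPELINES, for example {"caissSpider.pipelines.MongoNewsPipeline": 300}, where the module path is a guess at this project’s layout.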

2.4 requirements.txt

These are the packages needed to deploy to the Heroku Scheduler.

2.5 scrapy.cfg

2.6 setup.py

I didn’t change anything from this for the two files above; they are simple setup scripts.

2.7 caissSpider.py

Finally, let’s look at the core spider file.

2.7.1 Note that start_url contains the URLs we want to scrape.

2.7.2 parse(self, response) keeps scraping the sites in start_url, but the responses may not arrive in the order the URLs are listed in start_url. That is to say, the response from “infoworld” may come earlier than the one from “techcrunch”.

Thus, I use simple arrays, “xpathSel”, “compareUrls”, “sites”, and “domains”, to keep track of the URL order for comparison.

2.7.3 For each site in start_url, the spider scrapes “GRABNO” entries from the response; GRABNO is 3 here.
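An alternative to keeping parallel arrays in sync is to key each site’s settings by its domain and look them up from the response URL, so the arrival order no longer matters. This is a swapped-in technique, not what caissSpider.py does; the domains and XPath strings in SITES are placeholders.

```python
from urllib.parse import urlparse

GRABNO = 3  # entries to keep per site, as in the post

# Hypothetical per-site settings keyed by domain; the XPath strings are
# placeholders, not the ones used in caissSpider.py.
SITES = {
    "www.infoworld.com": {"name": "infoworld", "xpath": "//h3/a/@href"},
    "techcrunch.com": {"name": "techcrunch", "xpath": "//h2/a/@href"},
}


def site_for(url):
    """Find the config for whichever site a response came from."""
    return SITES.get(urlparse(url).netloc)


def tag_entries(url, entries, limit=GRABNO):
    """Label the first `limit` entries with the name of their site."""
    config = site_for(url)
    if config is None:
        return []
    return [{"site": config["name"], "entry": entry} for entry in list(entries)[:limit]]

# Inside a Scrapy callback this could be used as:
#     config = site_for(response.url)
#     links = response.xpath(config["xpath"]).getall()
#     items = tag_entries(response.url, links)
```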

2.7.4 xPath

XPath is an easy-to-learn path selector for web pages and XML documents. I am not an XPath expert, but here are some tips I can share.

1) “/” selects a direct child of the current node

2) “//” selects matching nodes at any depth below the current node

3) “[@class=]” is useful if “//../” doesn’t work. For example,

When “//h2/a/text()” doesn’t work, you should try this,

4) Use “text()” to grab text and “@href” to scrape URLs. Remember to put “/” before them in some cases.

5) To scrape a node whose class attribute contains only some of the words you know, use “[contains(@class, '<some words>')]” instead of “[@class='<full words>']”.

To conclude, XPath is not hard to learn: we can figure it out quickly by scraping something, printing it, and analyzing it.
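To try the tips without running a spider, Python’s built-in ElementTree is enough for tips 1 to 3, since it supports a subset of XPath. It has no text(), @href steps, or contains(), so tips 4 and 5 are emulated in Python below. In Scrapy itself you would pass the full expressions to response.xpath(), which supports XPath 1.0. The HTML fragment is made up for the experiment.

```python
import xml.etree.ElementTree as ET

# A tiny, made-up XHTML fragment to experiment on.
PAGE = """
<div>
  <article class="post featured">
    <h2><a href="/a1">First story</a></h2>
  </article>
  <section>
    <article class="post">
      <h2><a href="/a2">Second story</a></h2>
    </article>
  </section>
</div>
""".strip()

root = ET.fromstring(PAGE)

# Tip 1: "/" steps to a direct child, so only the top-level article matches.
direct = root.findall("./article/h2/a")

# Tip 2: "//" searches at any depth, so both links match.
anywhere = root.findall(".//h2/a")

# Tip 3: [@class='...'] compares the whole attribute value, so only the
# article whose class is exactly "post" matches.
exact = root.findall(".//article[@class='post']")

# Tip 4: ElementTree has no text() or @href steps; use .text and .get() instead.
titles = [a.text for a in anywhere]
hrefs = [a.get("href") for a in anywhere]

# Tip 5: ElementTree has no contains(), so emulate it in Python.
partial = [el for el in root.iter("article") if "featured" in el.get("class", "")]

print(titles, hrefs)  # ['First story', 'Second story'] ['/a1', '/a2']
```

Printing each intermediate list, as suggested above, is the quickest way to see why an expression matches more or fewer nodes than expected.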

(3) Results in the MongoDB

The fields of the items saved in MongoDB are exactly the same as those defined in “items.py”.

(4) Commands

To run the spider, there are three options; the latter two also write the results to output files.

My .json file result

(5) Heroku Deploy

We can easily set up a Heroku Scheduler for free, as the following image illustrates. Since a web spider generates a lot of throughput and AWS charges for it, deploying on Heroku will save some bucks if your spider runs once per day.

First, we need to create a Procfile to tell Heroku which command runs this application. So create a Procfile and put the following command in it.

Then we can set a free Scheduler in Heroku.


The scheduler will run it daily at 00:00 and push the results to the database you set up earlier.

(6) Post to your website

Congratulations! You now know how to write a web spider and deploy it as a product. Enjoy Scrapy and XPath!


Reference: